
1.1 Definition of Neural Networks

Neural networks (NN) are computational models inspired by the structure and functioning of the human brain. They consist of interconnected nodes, or neurons, organized into layers that process and transform input data to produce output. NN have gained immense popularity in machine learning for their ability to learn complex patterns and representations, making them a fundamental component of artificial intelligence systems.

1.2 Historical Context

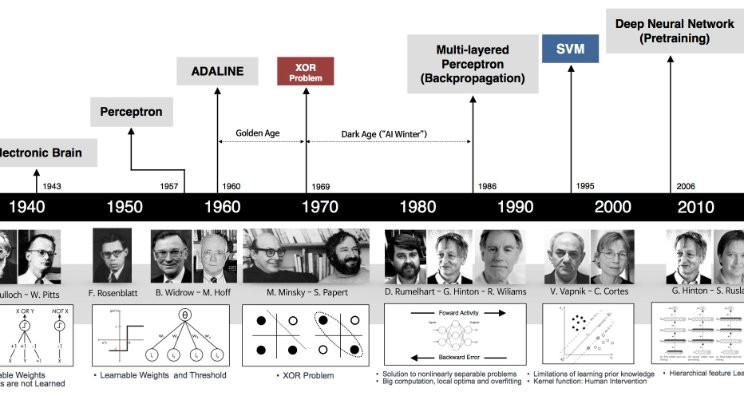

The concept of NN dates back to the 1940s, when Warren McCulloch and Walter Pitts proposed a mathematical model of neurons, paving the way for the development of artificial neural networks (ANNs). However, it wasn’t until the 1980s that NN gained prominence, owing to advances in computing power and the popularization of the backpropagation algorithm, a key technique for training neural networks.

1.3 Importance in Artificial Intelligence

NN play a pivotal role in the field of artificial intelligence (AI). Their ability to learn from data and adapt to complex tasks has led to breakthroughs in image and speech recognition, natural language processing, and even game-playing algorithms. The advent of deep learning, a subfield of machine learning centered around deep neural networks, has further elevated the significance of neural networks in creating sophisticated AI systems.

Foundations of NN

2.1 Neurons and Synapses

At the core of neural networks are artificial neurons, which receive inputs, apply a transformation using weights and biases, and produce an output. These neurons are connected by synapses, analogous to the connections between biological neurons. The strength of these connections (weights) determines the impact of one neuron’s output on another.
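The computation performed by a single artificial neuron can be sketched in a few lines of plain Python. The function name `neuron`, the sigmoid activation, and the input, weight, and bias values are assumptions chosen only for illustration.

```python
import math

def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias, passed through a sigmoid."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical values: z = 0.5*1.0 + (-0.25)*2.0 + 0.0 = 0.0
output = neuron(inputs=[1.0, 2.0], weights=[0.5, -0.25], bias=0.0)
print(output)  # sigmoid(0.0) is 0.5
```

A larger weight makes the corresponding input pull the output more strongly, which is the sense in which weights encode connection strength.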

2.2 Activation Functions

Activation functions introduce non-linearity to the NN, allowing it to model complex relationships in data. Common activation functions include the sigmoid, hyperbolic tangent (tanh), and rectified linear unit (ReLU), each serving specific purposes in capturing different types of patterns.

Table 1: Comparison of Activation Functions

| Activation Function | Range | Characteristics |
|---|---|---|
| Sigmoid | (0, 1) | Smooth, used in output layer for binary classification. |
| Tanh | (-1, 1) | Similar to sigmoid, centered around zero, helps with zero-centered data. |
| ReLU | [0, ∞) | Rectified Linear Unit, computationally efficient, widely used in hidden layers. |
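To make the ranges in Table 1 concrete, here is a minimal sketch of the three functions in plain Python. The sample inputs are arbitrary, and real frameworks apply these functions element-wise to whole tensors rather than to single numbers.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))   # output in (0, 1)

def tanh(z):
    return math.tanh(z)                 # output in (-1, 1), zero-centered

def relu(z):
    return max(0.0, z)                  # output in [0, inf)

for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}  relu={relu(z):.1f}")
```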

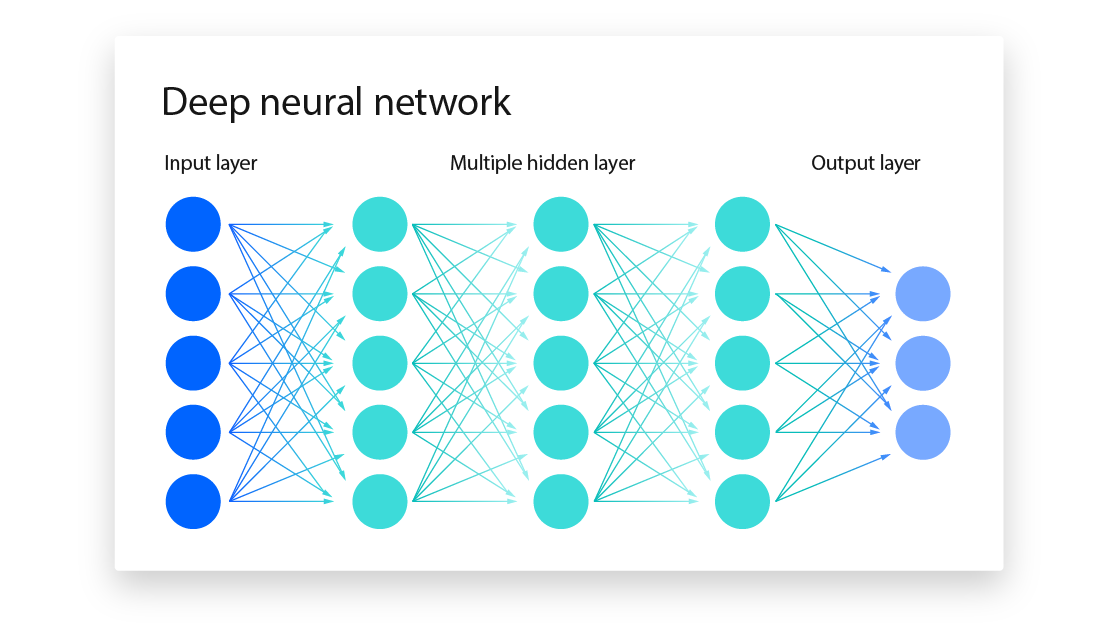

2.3 Layers in Neural Networks

NN are organized into layers: the input layer receives data, hidden layers process information, and the output layer produces the final result. The depth of an NN refers to the number of hidden layers, contributing to its ability to learn hierarchical representations.
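As an illustration of data flowing from the input layer through a hidden layer to the output layer, the sketch below hand-wires a tiny 2-3-1 network. The helper `dense` and all weights are hypothetical; the weights were picked by hand (not learned) so that the network computes XOR, a function that cannot be represented without a hidden layer.

```python
def relu(z):
    return max(0.0, z)

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of `weights` feeds one neuron."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

# Hypothetical hand-picked parameters: 2 inputs, 3 hidden neurons, 1 output.
hidden_w = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
hidden_b = [0.0, 0.0, -1.0]
output_w = [[1.0, 1.0, -2.0]]
output_b = [0.0]

def forward(x):
    h = dense(x, hidden_w, hidden_b, relu)              # hidden layer
    return dense(h, output_w, output_b, lambda z: z)    # linear output layer

for x in ([0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]):
    print(x, "->", forward(x))
```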

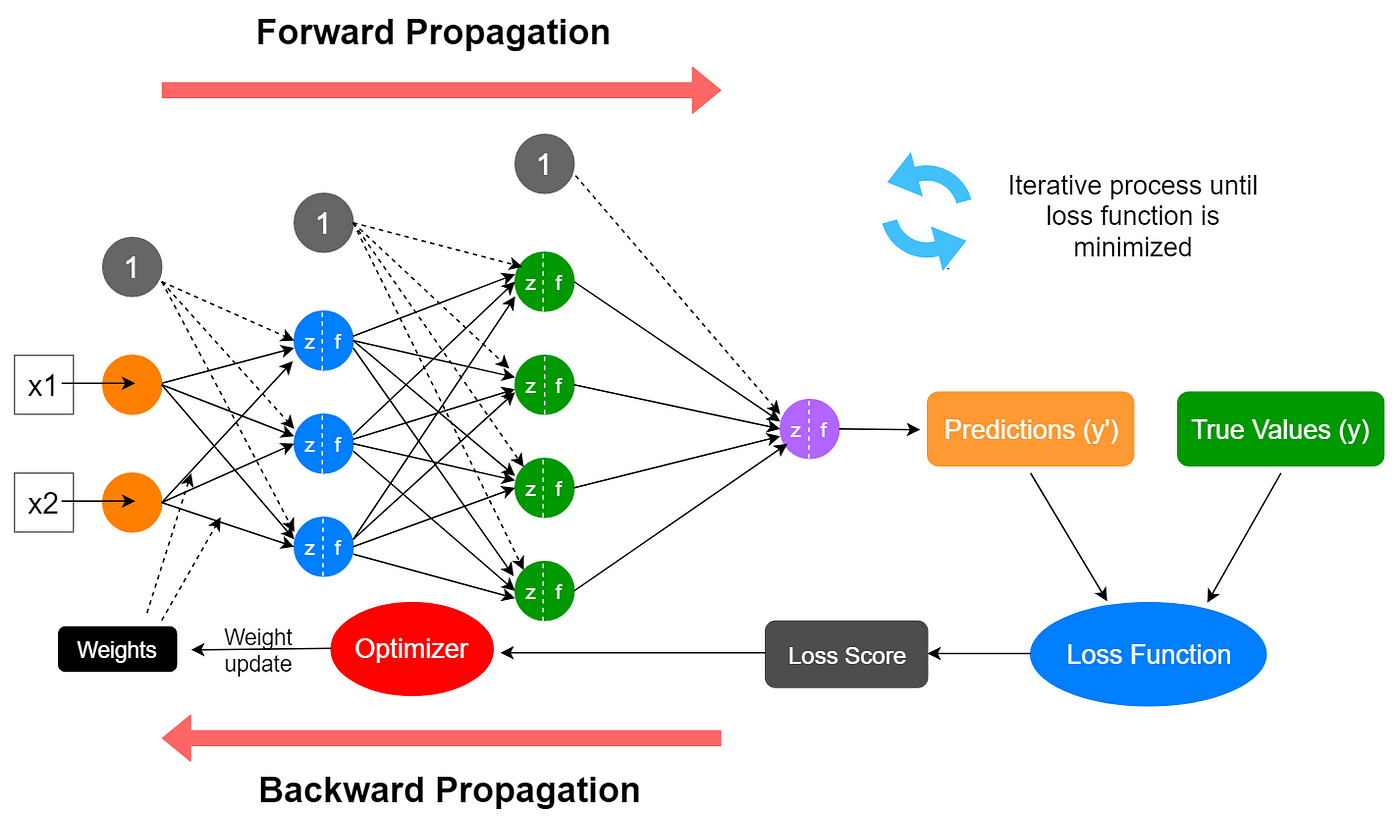

2.4 Training Neural Networks: Backpropagation

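Training a neural network involves adjusting the weights and biases to minimize the difference between predicted and actual outputs. Backpropagation is a key algorithm for this task: it applies the chain rule to propagate the error backward through the network layers and compute the gradient of the loss with respect to each parameter. An optimizer such as gradient descent then uses those gradients to update the parameters and improve the model's performance.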
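The forward pass, backward pass, and parameter update can be sketched on a deliberately tiny model. The model `y_hat = w2 * tanh(w1 * x)`, the single training example, the initial weights, and the learning rate are all assumptions made for illustration; real networks have many parameters and rely on a framework's automatic differentiation.

```python
import math

# Tiny two-layer model: y_hat = w2 * tanh(w1 * x).
# All values below are hypothetical and chosen only for illustration.
x, y = 1.0, 0.5          # one training example
w1, w2 = 0.5, 0.5        # initial weights
learning_rate = 0.1

losses = []
for step in range(200):
    # Forward pass
    h = math.tanh(w1 * x)            # hidden activation
    y_hat = w2 * h                   # prediction
    losses.append(0.5 * (y_hat - y) ** 2)

    # Backward pass: chain rule from the loss back to each weight
    d_y_hat = y_hat - y              # dL/dy_hat
    d_w2 = d_y_hat * h               # dL/dw2
    d_h = d_y_hat * w2               # dL/dh
    d_w1 = d_h * (1.0 - h ** 2) * x  # dL/dw1, using tanh'(z) = 1 - tanh(z)^2

    # Gradient descent update
    w1 -= learning_rate * d_w1
    w2 -= learning_rate * d_w2

print(f"loss before: {losses[0]:.4f}, loss after: {losses[-1]:.6f}")
```

Note that the gradient for `w1` reuses `d_h`, which was computed for the layer above it. That reuse of intermediate results is what makes backpropagation efficient in deep networks.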

In conclusion, NN represent a cornerstone in artificial intelligence, leveraging the principles of interconnected neurons, activation functions, and layered architectures to learn and generalize from data. Understanding the foundations of NN is crucial for practitioners seeking to harness their power in developing advanced machine learning models.

Types of NN

3.1 Feedforward Neural Networks (FNN)

Table 2: Characteristics of Feedforward Neural Networks

| Attribute | Description |
|---|---|
| Architecture | Unidirectional, no loops or cycles in connections. |
| Use Cases | Image and speech recognition, classification, regression. |
| Training Algorithm | Backpropagation is commonly used. |
| Example Code Snippet | `from tensorflow.keras.models import Sequential`<br>`from tensorflow.keras.layers import Dense`<br>`model = Sequential()`<br>`model.add(Dense(128, activation='relu', input_shape=(input_size,)))`<br>`model.add(Dense(10, activation='softmax'))` |

3.2 Recurrent Neural Networks (RNN)

Table 3: Characteristics of Recurrent Neural Networks

| Attribute | Description |
|---|---|
| Architecture | Contains cycles, allowing information to persist. |
| Use Cases | Natural language processing, time series prediction, speech recognition. |
| Training Algorithm | Backpropagation through time (BPTT) is commonly used. |
| Example Code Snippet | `from tensorflow.keras.models import Sequential`<br>`from tensorflow.keras.layers import SimpleRNN, Dense`<br>`model = Sequential()`<br>`model.add(SimpleRNN(64, activation='relu', input_shape=(time_steps, features)))`<br>`model.add(Dense(10, activation='softmax'))` |
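To show what "allowing information to persist" means in practice, here is a minimal sketch of a recurrent update with a single scalar hidden state. The function `rnn_step` and the weights are hypothetical; a layer such as `SimpleRNN` applies the same idea with weight matrices and vector-valued states.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One recurrent update: the new state depends on the input and the previous state."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

# Hypothetical scalar weights chosen for illustration.
w_x, w_h, b = 1.0, 0.5, 0.0

h = 0.0                      # initial hidden state
states = []
for x_t in [1.0, 0.0, 0.0]:  # a short input sequence
    h = rnn_step(x_t, h, w_x, w_h, b)
    states.append(h)

print(states)
```

Only the first input is non-zero, yet the later states stay above zero: the cycle through `h_prev` carries a fading trace of earlier inputs forward in time.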

3.3 Convolutional Neural Networks (CNN)

Table 4: Characteristics of CNN

| Attribute | Description |
|---|---|
| Architecture | Specialized for grid-like data, e.g., images. |
| Use Cases | Image and video analysis, object detection, facial recognition. |
| Training Algorithm | Backpropagation is adapted with convolutional operations. |
| Example Code Snippet | `from tensorflow.keras.models import Sequential`<br>`from tensorflow.keras.layers import Conv2D, MaxPooling2D, Flatten, Dense`<br>`model = Sequential()`<br>`model.add(Conv2D(32, kernel_size=(3, 3), activation='relu', input_shape=(height, width, channels)))`<br>`model.add(MaxPooling2D(pool_size=(2, 2)))`<br>`model.add(Flatten())`<br>`model.add(Dense(10, activation='softmax'))` |
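The two operations at the heart of the snippet above, convolution and max pooling, can be sketched in plain Python on a single-channel image. The helpers `conv2d` and `max_pool2d`, the 4x4 image, and the hand-written edge kernel are illustrative assumptions; in a trained CNN the kernel values are learned, and layers such as `Conv2D` also handle multiple channels, filters, and biases.

```python
def conv2d(image, kernel):
    """Valid cross-correlation (what deep learning libraries call convolution), stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(feature_map, size=2):
    """Non-overlapping max pooling with a size x size window."""
    return [
        [
            max(feature_map[i + di][j + dj]
                for di in range(size) for dj in range(size))
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# Hypothetical 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# 2x2 kernel that responds to a dark-to-bright step from left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]

features = conv2d(image, kernel)   # 3x3 feature map
pooled = max_pool2d(features)      # 1x1 map after 2x2 pooling
print(features)  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
print(pooled)    # [[2]]
```

The feature map is non-zero only where the kernel overlaps the edge, and pooling keeps the strongest response while shrinking the map.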

3.4 Generative Adversarial Networks (GAN)

Table 5: Characteristics of Generative Adversarial Networks

| Attribute | Description |
|---|---|
| Architecture | Comprises a generator and a discriminator, trained adversarially. |
| Use Cases | Image generation, style transfer, data augmentation. |
| Training Algorithm | Adversarial training, involving generator and discriminator networks. |
| Example Code Snippet | `from tensorflow.keras.models import Sequential, Model`<br>`from tensorflow.keras.layers import Dense, LeakyReLU, BatchNormalization, Input, Reshape`<br>`# Generator model`<br>`generator = Sequential()`<br>`# ...`<br>`# Discriminator model`<br>`discriminator = Sequential()`<br>`# ...`<br>`# Combined GAN model`<br>`z = Input(shape=(latent_dim,))`<br>`img = generator(z)`<br>`validity = discriminator(img)`<br>`gan = Model(z, validity)` |

Applications of Neural Networks

4.1 Image Recognition and Classification

Neural networks, particularly CNNs, excel in image recognition tasks. They can automatically learn and identify patterns in images, making them invaluable in applications like facial recognition, object detection, and image classification.

4.2 Natural Language Processing

RNNs and other sequential models are widely used in natural language processing tasks. These networks can understand and generate human-like text, enabling applications such as language translation, sentiment analysis, and chatbots.

4.3 Autonomous Vehicles

Neural networks are integral to the development of autonomous vehicles. They process sensor data, interpret the environment, and make decisions in real-time, contributing to tasks like lane detection, object recognition, and path planning.

4.4 Healthcare: Diagnosis and Drug Discovery

In healthcare, neural networks are employed for disease diagnosis and drug discovery. They analyze medical images, predict patient outcomes, and assist in identifying potential drug candidates by understanding complex biological patterns.

In conclusion, the diverse types of neural networks cater to various tasks, from image recognition to natural language processing. Their applications in image classification, autonomous vehicles, healthcare, and beyond showcase the versatility and impact of neural networks in shaping the future of artificial intelligence.

Advancements and Challenges

5.1 Recent Advancements in Neural Networks

Table 6: Recent Advancements in NN

| Advancement | Description |
|---|---|
| Transformer Architectures | Introduction of transformer architectures, e.g., BERT and GPT, revolutionizing NLP. |
| Self-Supervised Learning | Learning from unlabeled data, improving model performance with limited labeled data. |
| Neural Architecture Search (NAS) | Automated exploration of model architectures, enhancing efficiency in model design. |
| Transfer Learning | Leveraging pre-trained models for new tasks, reducing the need for extensive training. |
| Explainable AI | Focus on interpretable models, addressing the “black box” nature of neural networks. |

5.2 Challenges in Neural Networks Development

Table 7: Challenges in NN Development

| Challenge | Description |
|---|---|
| Overfitting | Risk of models learning noise in the training data, impacting generalization. |
| Vanishing and Exploding Gradients | Difficulties in training deep networks due to gradients becoming too small or large. |
| Computational Resources | Resource-intensive training processes, particularly for large neural networks. |
| Lack of Interpretability | Difficulty in understanding and explaining decisions made by complex models. |
| Data Quality and Bias | Dependence on high-quality, unbiased data to avoid perpetuating societal biases. |
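The vanishing-gradient entry in Table 7 can be illustrated with a short calculation. This sketch assumes a chain of sigmoid layers with weights of 1 and pre-activations of 0, which is the most favorable case for sigmoid; it shows only the scaling effect, not a full training run.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)   # peaks at 0.25 when z = 0

# Best case for sigmoid: every pre-activation is 0, so each layer
# contributes its maximum local derivative of 0.25 (weights assumed to be 1).
for depth in (1, 5, 10, 20):
    gradient_scale = sigmoid_grad(0.0) ** depth
    print(f"{depth:2d} layers -> gradient scaled by {gradient_scale:.2e}")
```

Because each sigmoid layer multiplies the backpropagated signal by at most 0.25, the gradient reaching the early layers shrinks geometrically with depth. This is one reason ReLU is widely used in hidden layers.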

5.3 Ethical Considerations of Neural Networks

Ethical considerations in neural network development are paramount. Table 8 outlines key ethical considerations:

Table 8: Ethical Considerations

| Ethical Consideration | Description |
|---|---|
| Bias and Fairness | Ensuring models are fair and unbiased, avoiding discriminatory outcomes. |
| Privacy Concerns | Safeguarding user data and ensuring responsible data handling practices. |
| Accountability and Transparency | Establishing accountability for model decisions and maintaining transparency in AI systems. |
| Security Risks | Addressing vulnerabilities and potential misuse of AI technologies for malicious purposes. |

Comparison with Traditional Machine Learning

6.1 Neural Networks vs. Classical Machine Learning

Table 9: Comparison of Neural Networks and Classical Machine Learning

| Aspect | Neural Networks | Classical Machine Learning |
|---|---|---|
| Model Complexity | Highly complex, capable of learning intricate patterns. | Simpler models, may struggle with complex relationships. |
| Feature Engineering | Automatically learn hierarchical representations from data. | Requires manual feature engineering. |
| Data Size | Benefit from large datasets for optimal performance. | More tolerant to smaller datasets. |
| Interpretability | Often considered as “black boxes,” challenging to interpret. | Generally more interpretable and transparent. |

Real-World Examples

7.1 Google’s AlphaGo

AlphaGo, a neural network-based AI developed by Google DeepMind, combined deep neural networks with tree search to play the complex board game Go. It defeated top professional players, including Lee Sedol in 2016, showcasing the ability of neural networks to master intricate strategies and outperform human experts.

7.2 Voice Assistants: Siri, Alexa, Google Assistant

Voice assistants, such as Siri, Alexa, and Google Assistant, leverage neural networks for natural language processing and speech recognition. They exemplify the application of neural networks in creating intuitive and interactive user experiences.

7.3 Facial Recognition Technology

Facial recognition technology utilizes neural networks to identify and authenticate individuals based on facial features. This technology is employed in various sectors, including security, law enforcement, and user authentication.

In conclusion, recent advancements in NN, along with the associated challenges and ethical considerations, shape the landscape of artificial intelligence. The comparison with traditional machine learning highlights the strengths and considerations of NN. Real-world examples, including Google’s AlphaGo, voice assistants, and facial recognition technology, underscore the transformative impact of neural networks in diverse applications.

Implementing Neural Networks: A Practical Guide

8.1 Setting up the Environment

Before diving into neural network implementation, setting up the environment is crucial. Table 10 outlines the necessary steps:

Table 10: Setting up the Environment

| Step | Description |
|---|---|
| Install Python | Set up Python, a widely-used programming language for machine learning. |
| Choose IDE | Select an integrated development environment (IDE) such as Jupyter Notebook or VSCode. |
| Install Libraries | Install popular libraries like TensorFlow or PyTorch for neural network development. |
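Once the steps in Table 10 are done, a quick sanity check can confirm that the environment is ready. The sketch below uses only the standard library; the helper name `is_installed` is hypothetical, and the package list assumes the usual import names (`tensorflow` for TensorFlow, `torch` for PyTorch).

```python
import importlib.util
import sys

def is_installed(package):
    """Return True if `package` can be imported in the current environment."""
    return importlib.util.find_spec(package) is not None

print(f"Python {sys.version_info.major}.{sys.version_info.minor}")
for package in ("tensorflow", "torch"):
    status = "installed" if is_installed(package) else "missing"
    print(f"{package}: {status}")
```

Only one of the two libraries is needed for the walkthrough in this guide, so a "missing" line for the other is not a problem.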

8.2 Popular Libraries for Neural Networks

Table 11: Popular Libraries for NN

| Library | Description |
|---|---|
| TensorFlow | An open-source machine learning library developed by Google. |
| PyTorch | A deep learning library developed by Facebook, known for its dynamic computation graph. |
| Keras | A high-level neural networks API, often used as a frontend for TensorFlow or Theano. |
| scikit-learn | A machine learning library that includes tools for neural network implementation. |
| MXNet | A deep learning framework with support for multiple programming languages. |

8.3 Example Code Walkthrough

Let’s walk through a simple example using TensorFlow and Keras for building a feedforward neural network:

    # Example code
    import tensorflow as tf
    from tensorflow.keras.models import Sequential
    from tensorflow.keras.layers import Dense, Flatten

    # Load the MNIST dataset of handwritten digits
    (X_train, y_train), (X_test, y_test) = tf.keras.datasets.mnist.load_data()
    X_train, X_test = X_train / 255.0, X_test / 255.0  # Normalize pixel values to between 0 and 1

    # Build a simple feedforward neural network
    model = Sequential([
        Flatten(input_shape=(28, 28)),  # Flatten the 28x28 input images
        Dense(128, activation='relu'),
        Dense(10, activation='softmax')
    ])

    # Compile the model
    model.compile(optimizer='adam',
                  loss='sparse_categorical_crossentropy',
                  metrics=['accuracy'])

    # Train the model
    model.fit(X_train, y_train, epochs=5)

    # Evaluate the model on the test set
    test_loss, test_acc = model.evaluate(X_test, y_test)
    print(f'Test accuracy: {test_acc}')

This example uses the MNIST dataset for digit recognition. It demonstrates the steps of loading data, building a neural network, compiling it, training, and evaluating its performance.
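To clarify what the `softmax` output layer and the `sparse_categorical_crossentropy` loss compute, here is a minimal per-example sketch in plain Python. It is not Keras's implementation, which works on batches and guards against `log(0)`; the function names mirror the Keras strings only for readability, and the scores are made up.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    shift = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def sparse_categorical_crossentropy(label, probs):
    """Negative log-probability assigned to the true class index."""
    return -math.log(probs[label])

# Hypothetical scores for a 3-class problem; the true class is index 0.
probs = softmax([2.0, 1.0, 0.1])
loss = sparse_categorical_crossentropy(0, probs)
print(probs, loss)
```

"Sparse" means the label is an integer class index (as in MNIST's `y_train`) rather than a one-hot vector. The loss approaches zero as the probability of the true class approaches 1.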

Future Directions of Neural Networks

9.1 Trends and Emerging Technologies

Table 12: Trends and Emerging Technologies in Neural Networks

| Trend | Description |
|---|---|
| Explainable AI | Growing emphasis on developing models that are interpretable and transparent. |
| Federated Learning | Distributed learning across devices, enhancing privacy and reducing data centralization. |
| Quantum Neural Networks | Exploration of quantum computing for accelerating neural network computations. |
| Neuromorphic Computing | Mimicking the structure and functioning of the human brain in hardware architectures. |